Thanks for sending the files.

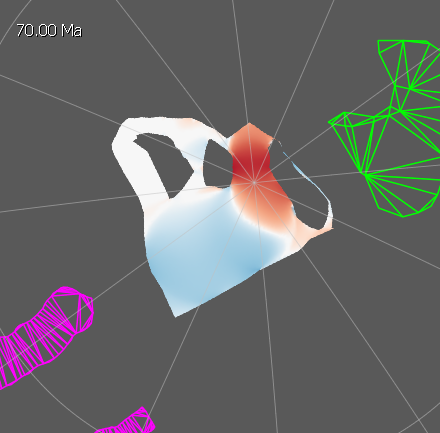

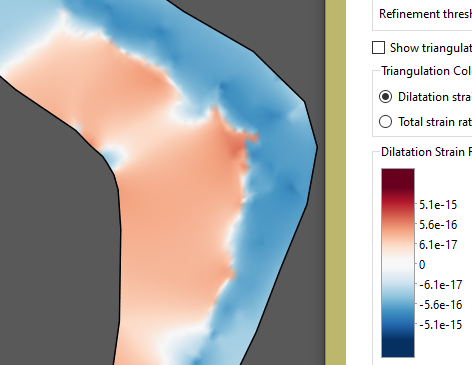

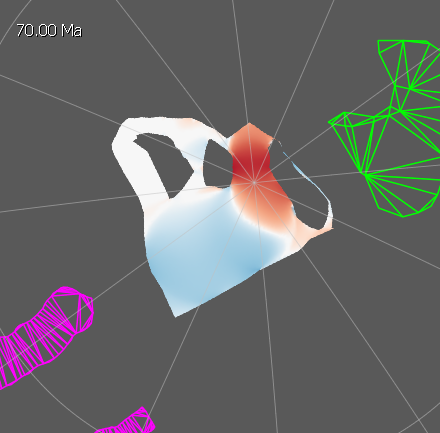

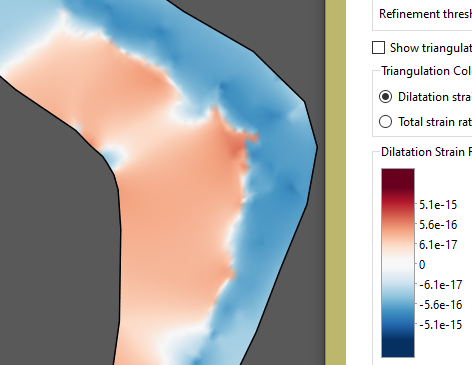

I see what you mean - there is some kind of pole issue still present - and, as you noted, changing the anchor plate ID highlights this.

Despite using a different projection as the base for the mesh triangulation (to avoid pole issues), we’re still calculating the spatial velocity gradients (i.e., strain rate) in lat/lon space. I suspect that’s causing this issue. Thanks for pointing this out. I’ll need to look into changing that. We do plan to replace CGAL relatively soon (not that this is CGAL’s fault), so I’ll look into it then.
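
To illustrate why gradients taken in lat/lon space can be sensitive near a pole, here is a toy sketch. It is not the GPlates calculation, just an assumed example on a unit sphere. It takes a rigid rotation about an equatorial axis, which has zero true strain rate, and estimates the east-west normal strain rate two ways: from the longitude derivative alone, and with the standard spherical curvature term (`v_north * tan(lat)`) included. The function names and the chosen rotation axis are made up for the illustration.

```python
import math

def rigid_velocity(lat_deg, lon_deg, omega=1.0):
    """East/north velocity on a unit sphere for a rigid rotation about
    the axis through (lat=0, lon=0). Hypothetical test field, zero strain."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    v_east = -omega * math.sin(lat) * math.cos(lon)
    v_north = omega * math.sin(lon)
    return v_east, v_north

def east_west_strain_rate(lat_deg, lon_deg, include_curvature_term, h_deg=1e-3):
    """Central-difference estimate of the east-west normal strain rate."""
    lat = math.radians(lat_deg)
    h = math.radians(h_deg)
    u_plus, _ = rigid_velocity(lat_deg, lon_deg + h_deg)
    u_minus, _ = rigid_velocity(lat_deg, lon_deg - h_deg)
    _, v_north = rigid_velocity(lat_deg, lon_deg)
    # Longitude derivative, scaled by the east-west arc length per radian.
    rate = (u_plus - u_minus) / (2.0 * h * math.cos(lat))
    if include_curvature_term:
        # Curvature correction from spherical coordinates.
        rate -= v_north * math.tan(lat)
    return rate

for lat in (0.0, 60.0, 85.0, 89.0):
    naive = east_west_strain_rate(lat, 90.0, include_curvature_term=False)
    full = east_west_strain_rate(lat, 90.0, include_curvature_term=True)
    print(f"lat={lat:5.1f}  lon-derivative only={naive:9.4f}  with curvature term={full:9.1e}")
```

In this toy field the longitude-only estimate grows like `tan(lat)` towards the pole, even though nothing is deforming, while the version with the curvature term stays at roughly zero. Whether this is exactly what happens in our code is something I still need to check.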

I think this issue is a lot more obvious in your data because your deforming networks appear to cover the entire globe (in your world-building context). For our deforming networks (in a geological context) it’s quite rare that an active network crosses a pole, so this probably just hasn’t been noticed yet.

Although I just checked our networks and can see an inactive network (a region where deformation has ceased and hence should have zero deformation) that covers the North pole…

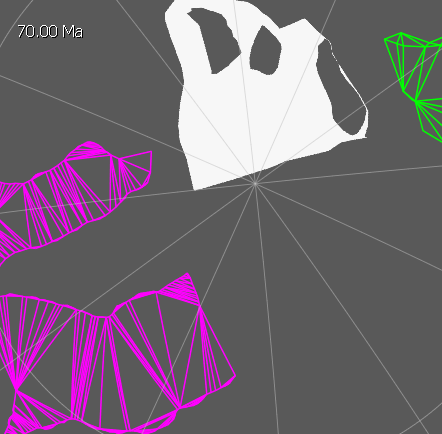

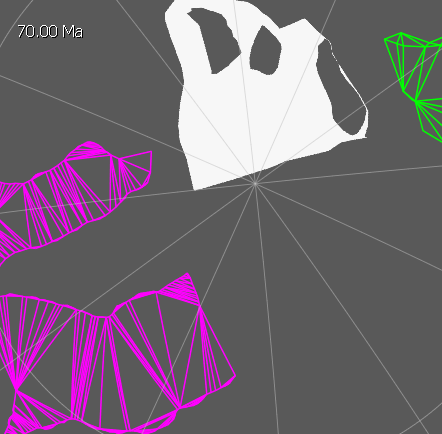

…but it should be all white (i.e., no deformation). If I change the anchor plate to move it off the pole then it becomes all white as expected…

…but being an inactive network it’s probably not a big issue for us.

I think I misunderstood you before. I was thinking you were referring to generating deforming mesh points (under the Features > Generate Deforming Mesh Points menu) which are deformed through time by the deforming networks…

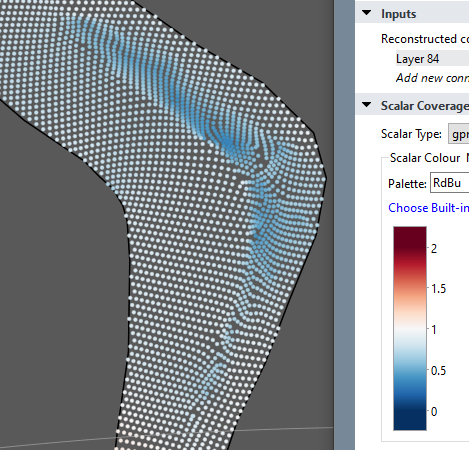

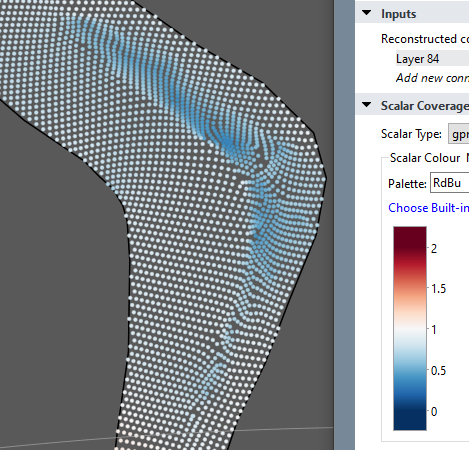

But, after taking a look at your files, I think you mean the actual creation of the deforming networks themselves and, as you mentioned, displaying them with natural neighbour smoothing…

Actually the reason for the slowdown remains the same (i.e., lots of points sampling the Delaunay mesh). It’s just that, in this case, the points are all the “raster” locations in the bottom screenshot (so that you can visualise the variation in strain rate) versus the explicit points in the screenshot above it.

In your case you actually have deforming meshes covering the entire globe, which I didn’t anticipate, so that would definitely slow things down. Also, as you noted, you can turn off fill mode or select no smoothing and it will be much faster.
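
As a rough feel for the numbers, here is a small sketch that counts how many sample locations a global lat/lon grid would need at a few spacings. The spacings and the explicit-point count are hypothetical, and the actual sampling used for the filled display may differ. The point is only that each sample is a separate query against the Delaunay mesh, so full-globe coverage multiplies the work quickly.

```python
def global_raster_sample_count(resolution_deg):
    """Number of nodes in a global lat/lon grid at the given spacing
    (hypothetical grid layout: both poles included, no duplicate meridian)."""
    n_lon = round(360.0 / resolution_deg)
    n_lat = round(180.0 / resolution_deg) + 1
    return n_lon * n_lat

explicit_points = 10_000  # hypothetical count of generated deforming mesh points

for resolution_deg in (1.0, 0.5, 0.1):
    n_samples = global_raster_sample_count(resolution_deg)
    ratio = n_samples / explicit_points
    print(f"{resolution_deg:4.1f} deg grid: {n_samples:>9,d} mesh queries "
          f"({ratio:,.1f}x the explicit points)")
```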